This is pdfTeX, Version 3.1415926-2.3-1.40.12 (MiKTeX 2.9 64-bit) pdflatex: The memory dump file could not be found.

Using Notepad, I then added the following two lines to the top of the IraAppliedStats.md file.

When an application crashes, Dr. Watson will generally catch it and create a dump in the same location as the Drwtsn32.log file. This is the dump file we can open using the Debugging Tools for Windows. To do this, run Windbg.exe from the Debugging Tools folder. Click File > Open Crash Dump and browse to the .DMP file in question.

I think the problem is that the pdflatex engine has trouble displaying the small p-value in exponential notation. I solved the problem by clicking on the gear symbol next to the knit button, then, under output options on the advanced tab, changing the LaTeX Engine to lualatex, or you can just report the p-value as p.

I receive the error "pdflatex not found" when I try to convert a .tex or .md file to .pdf. I have downloaded MiKTeX and have the associated LaTeX packages. These don't seem to include pdflatex, although I do see pdftex. Are these not interchangeable? Can anyone guide me in figuring this out?

- 1 Frequently Asked Questions

- 1.1 Problems Starting the Memory Analyzer

- 1.2 Problems Getting Heap Dumps

- 1.4 Problems Interpreting Results

- 1.7 Extending Memory Analyzer

Pdflatex .exe The Memory Dump File Could Not Be Found File

Problems Starting the Memory Analyzer

java.lang.RuntimeException: No application id has been found.

The Memory Analyzer needs a Java 1.5 VM to run (heap dumps from JDK 1.4.2_12 onward are still supported for analysis). If in doubt, provide the runtime VM on the command line:

MemoryAnalyzer.exe -vm <path/to/java5/bin>

Alternatively, edit the MemoryAnalyzer.ini to contain (on two lines):

-vm

path/to/java5/bin

(This error happens because the MAT plug-in requires JDK 1.5 via its MANIFEST.MF file, and the OSGi runtime dutifully does not activate the plug-in.) Memory Analyzer version 1.1 will give a better error message pop-up.

Incompatible JVM

Version 1.4.2 of the JVM is not suitable for this product. Version 1.5.0 or greater is required.

Out of Memory Error while Running the Memory Analyzer

Well, analyzing big heap dumps can also require more heap space. Give it some more memory (possible by running on a 64-bit machine):

MemoryAnalyzer.exe -vmargs -Xmx4g -XX:-UseGCOverheadLimit

Alternatively, edit the MemoryAnalyzer.ini to contain:

-Xmx2g

As a rough guide, Memory Analyzer itself needs 32 to 64 bytes for each object in the analyzed heap, so -Xmx2g might allow a heap dump containing 30 to 60 million objects to be analyzed. Memory Analyzer 1.3 using -Xmx58g has successfully analyzed a heap dump containing over 948 million objects.
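To make the rough guide above concrete, here is a minimal Java sketch of the arithmetic. The class and method names (MatHeapEstimate, minObjects, and so on) are made up for illustration and are not part of Memory Analyzer; the 32 and 64 bytes-per-object figures are taken from the guide, and the result is only an estimate.

```java
// Illustrative helper only; not part of Memory Analyzer.
final class MatHeapEstimate {
    // Rough per-object overhead from the guide above.
    static final long MIN_BYTES_PER_OBJECT = 32;
    static final long MAX_BYTES_PER_OBJECT = 64;

    /** Optimistic heap size (bytes) for a dump with the given object count. */
    static long minHeapBytes(long objectCount) {
        return objectCount * MIN_BYTES_PER_OBJECT;
    }

    /** Pessimistic heap size (bytes) for a dump with the given object count. */
    static long maxHeapBytes(long objectCount) {
        return objectCount * MAX_BYTES_PER_OBJECT;
    }

    /** Objects that fit if every object costs 64 bytes. */
    static long minObjects(long xmxBytes) {
        return xmxBytes / MAX_BYTES_PER_OBJECT;
    }

    /** Objects that fit if every object costs only 32 bytes. */
    static long maxObjects(long xmxBytes) {
        return xmxBytes / MIN_BYTES_PER_OBJECT;
    }

    public static void main(String[] args) {
        long xmx = 2L * 1024 * 1024 * 1024; // corresponds to -Xmx2g
        System.out.printf("-Xmx2g: about %d to %d objects%n",
                minObjects(xmx), maxObjects(xmx));

        long objects = 948_000_000L; // the large dump mentioned above
        System.out.printf("%d objects: about %d to %d bytes of heap%n",
                objects, minHeapBytes(objects), maxHeapBytes(objects));
    }
}
```

For -Xmx2g this gives roughly 33 to 67 million objects, in line with the 30 to 60 million quoted above. For 948 million objects it gives roughly 28 to 57 GiB, which is consistent with the -Xmx58g run.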

The initial parse and generation of the dominator tree uses the most memory, so it can be useful to do the initial parse on a large machine, then copy the heap dump and index files to a more convenient machine for further analysis.

For more details, check out the section Running Eclipse in the Help Center. It also contains more details if you are running on Mac OS X.

If you are running the Memory Analyzer inside your Eclipse SDK, you need to edit the eclipse.ini file.

How to run on 64bit VM while the native SWT are 32bit

In short: if you run a 64-bit VM, all native parts must also be 64-bit. But what if, like Motif on AIX, the native SWT libraries are only available in a 32-bit version? You can still run the command-line parsing on 64-bit by executing the following command:

/usr/java5_64/jre/bin/java -jar plugins/org.eclipse.equinox.launcher_1*.jar -consoleLog -application org.eclipse.mat.api.parse path/to/dump.dmp.zip org.eclipse.mat.api:suspects org.eclipse.mat.api:overview org.eclipse.mat.api:top_components

Alternatively, the latest version of Memory Analyzer includes a ParseHeapDump.sh script, which relies on having java in the path.

Using plugins/org.eclipse.equinox.launcher_1*.jar finds a version of the Equinox Launcher available in your installation without having to specify the exact name of the launcher file, as this version changes regularly!

The org.eclipse.mat.api:suspects argument creates a ZIP file containing the leak suspect report. This argument is optional.

The org.eclipse.mat.api:overview argument creates a ZIP file containing the overview report. This argument is optional.

The org.eclipse.mat.api:top_components argument creates a ZIP file containing the top components report. This argument is optional.

With Memory Analyzer 0.8, but not Memory Analyzer 1.0 or later, the IBM DTFJ adapter has to be initialized in advance. To parse IBM dumps with the IBM DTFJ adapter in Memory Analyzer 0.8, use this command:

/usr/java5_64/jre/bin/java -Dosgi.bundles=org.eclipse.mat.dtfj@4:start,org.eclipse.equinox.common@2:start,org.eclipse.update.configurator@3:start,org.eclipse.core.runtime@start -jar plugins/org.eclipse.equinox.launcher_*.jar -consoleLog -application org.eclipse.mat.api.parse path/to/mydump.dmp.zip org.eclipse.mat.api:suspects org.eclipse.mat.api:overview org.eclipse.mat.api:top_components

Problems Getting Heap Dumps

Error: Found instance segment but expected class segment

This error indicates an inconsistent heap dump. The data in the heap dump is written in various segments; in this case, an address expected in a class segment is written into an instance segment.

The problem has been reported in heap dumps generated by jmap on Linux and Solaris operating systems with jdk1.5.0_13 and below. Solution: use the latest jdk/jmap version, or use jconsole to write the heap dump (needs jdk6).
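In jconsole, a heap dump is written by invoking the dumpHeap operation of the HotSpotDiagnostic MBean, and the same operation can be called from code. The following is a minimal sketch, assuming a HotSpot-based JVM and Java 8 or later (newer than the jdk6 mentioned above); the class name HeapDumper and the file name example.hprof are illustrative only.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

// Illustrative helper only; not part of Memory Analyzer.
final class HeapDumper {

    /**
     * Writes an HPROF heap dump of the current JVM into a fresh temporary
     * directory and returns the path of the dump file.
     *
     * @param liveOnly if true, only reachable (live) objects are dumped
     */
    static Path dumpToTempDir(boolean liveOnly) {
        try {
            Path dir = Files.createTempDirectory("heapdump");
            // The target file must not exist yet; recent JDKs also
            // expect the file name to end in .hprof.
            Path target = dir.resolve("example.hprof");
            HotSpotDiagnosticMXBean bean =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            bean.dumpHeap(target.toString(), liveOnly);
            return target;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path file = dumpToTempDir(true);
        System.out.println("Heap dump written to " + file);
    }
}
```

The resulting .hprof file can then be opened in Memory Analyzer like any other HotSpot heap dump.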

Error: Invalid heap dump file. Unsupported segment type 0 at position XZY

This almost always means the heap dump has not been written properly by the Virtual Machine. The Memory Analyzer is not able to read the heap dump.

If you are able to read the dump with other tools, please file a bug report. Using the HPROF options described under Enable Debug Output may help in debugging this problem.

Parser found N HPROF dumps in file X. Using dump index 0. See FAQ.

This warning message is printed to the log file if the heap dump was written via the (obsolete and unstable) HPROF agent. The agent can write multiple heap dumps into one HPROF file. Memory Analyzer 1.2 and earlier have no UI support for choosing which heap dump to read. By default, MAT takes the first heap dump. To read a different dump, start MAT with the system property MAT_HPROF_DUMP_NR=<index>.

Memory Analyzer 1.3 provides a dialog for the user to select the appropriate dump.

Enable Debug Output

To show debug output of MAT:

1. Create or append to the file '.options' in the eclipse main directory the lines:

Edit this file to remove some lines if you are not interested in output from a particular plug-in.

2. Start eclipse with the -debug option. This can be done by appending -debug to the eclipse.ini file in the same directory as the .options file.

3. Be sure to also enable the -consoleLog option to actually see the output.

4. If you want to enable debug output for the stand-alone Memory Analyzer, create the .options file in the mat directory and start Memory Analyzer using MemoryAnalyzer -debug -consoleLog

See FAQ_How_do_I_use_the_platform_debug_tracing_facility for a general explanation of how the debug trace works in Eclipse.

Problems Interpreting Results

MAT Does Not Show the Complete Heap

Symptom: When monitoring the memory usage interactively, the used heap size is much bigger than what MAT reports.

During the index creation, the Memory Analyzer removes unreachable objects because the various garbage collector algorithms tend to leave some garbage behind (if the object is too small, moving and re-assigning addresses is too expensive). This should, however, be no more than 3 to 4 percent. If you want to know what objects are removed, enable debug output as explained here: MemoryAnalyzer/FAQ#Enable_Debug_Output

Another reason could be that the heap dump was not written properly. Older VMs (1.4, 1.5) in particular can have problems if the heap dump is written via jmap.

Otherwise, feel free to report a bug.
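The symptom is easier to picture with a small experiment. The sketch below is only an illustration and not something Memory Analyzer does: it reads the used heap size that monitoring tools report, before and after requesting a garbage collection. The class name UsedHeapDemo is hypothetical, and System.gc() is only a request, so the numbers vary between runs and JVMs.

```java
import java.lang.management.ManagementFactory;

// Illustrative experiment only; not part of Memory Analyzer.
final class UsedHeapDemo {

    /** Used heap in bytes, as reported by the JVM's memory MXBean. */
    static long usedHeapBytes() {
        return ManagementFactory.getMemoryMXBean().getHeapMemoryUsage().getUsed();
    }

    public static void main(String[] args) {
        // Allocate some short-lived arrays that become unreachable immediately.
        for (int i = 0; i < 64; i++) {
            byte[] garbage = new byte[1024 * 1024];
            garbage[0] = 1;
        }

        long before = usedHeapBytes();
        System.gc(); // a hint; the JVM may or may not collect right away
        long after = usedHeapBytes();

        System.out.printf("used heap before GC request: %d bytes%n", before);
        System.out.printf("used heap after GC request:  %d bytes%n", after);
    }
}
```

Any drop between the two values is largely memory that was held by objects that were already unreachable, which is the kind of content MAT leaves out while building its indexes.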

How to analyse unreachable objects

By default unreachable objects are removed from the heap dump while parsing and will not appear in class histogram, dominator tree, etc. Yet it is possible to open a histogram of unreachable objects. You can do it:

1. From the link on the Overview page

2. From the Query Browser via Java Basics --> Unreachable Objects Histogram

This histogram has no object graph behind it (unreachable objects are removed during the parsing of the heap dump; only class names are stored). Thus it is not possible to see, for example, a list of references for a particular unreachable object.

But there is a possibility to keep unreachable objects while parsing. For this you need to either:

- parse the heap dump from the command line providing the argument -keep_unreachable_objects, i.e.

ParseHeapDump.bat -keep_unreachable_objects <heap dump>

or

- set the preference using 'Window' > 'Preferences' > 'Memory Analyzer' > 'Keep Unreachable Objects', then parse the dump. Memory Analyzer version 1.1 and later has this preference page option to select keep_unreachable_objects.

Crashes on Linux

Depending on the type of crash, consider testing with one or more of these options in MemoryAnalyzer.ini:

- -Dorg.eclipse.swt.browser.XULRunnerPath=/usr/lib/xulrunner-compat/

  - Normally you must first install your distribution's xulrunner-compat package

- -Dorg.eclipse.swt.browser.UseWebKitGTK=true

Extending Memory Analyzer

Is it possible to extend the Memory Analyzer to analyze the memory consumption of C or C++ programs?

No, this is not possible. The design of the Memory Analyzer is specific to Java heap dumps.

I was previously able to use this package, but now have no success. I am running MiKTeX 2.9 and WinEdt 7.0.

The first file I have attached is the .sty file I am trying to use. I installed it by adding it to the following directory: “C:\Local TeX Files\tex\latex\misc”, adding “C:\Local TeX Files” as a Root under MiKTeX Options (Admin), and then refreshing the FNDB and updating the formats.

The second file is the .tex file I made from the following tutorial: http://jackson13.info/mla13/Documentation.pdf.

Finally, this is the error I am receiving:

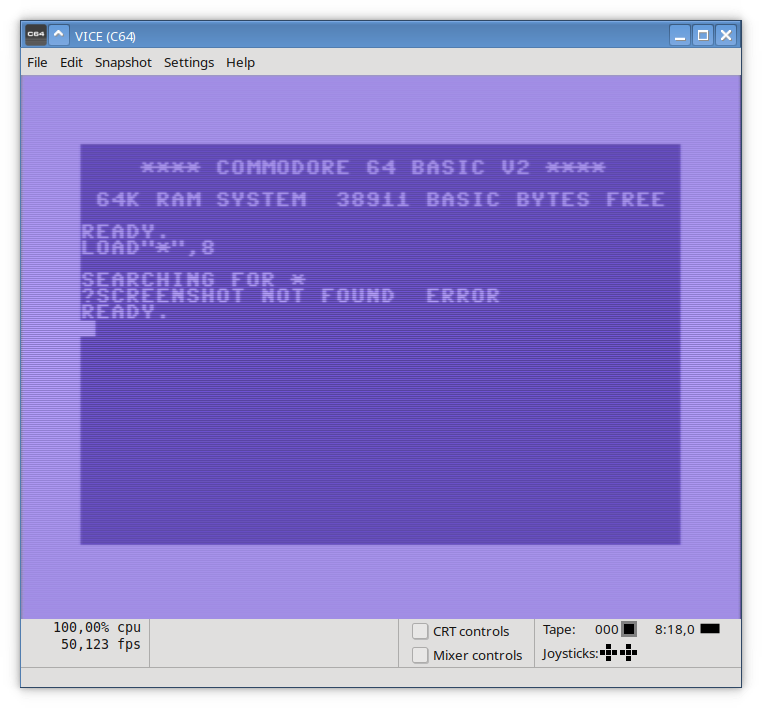

Pdflatex.exe The Memory Dump File Could Not Be Found

Command Line: texify.exe --pdf --tex-option=--interaction=errorstopmode --tex-option=--synctex=-1 'C:\Users\Ian McParland\Documents\New folder\Text.tex'

Startup Folder: C:\Users\John Doe\Documents\New folder

texify: pdflatex.exe failed for some reason (see log file).

I tried reinstalling the .sty file but still have the same issue. What’s going on?